Evaluate predictions

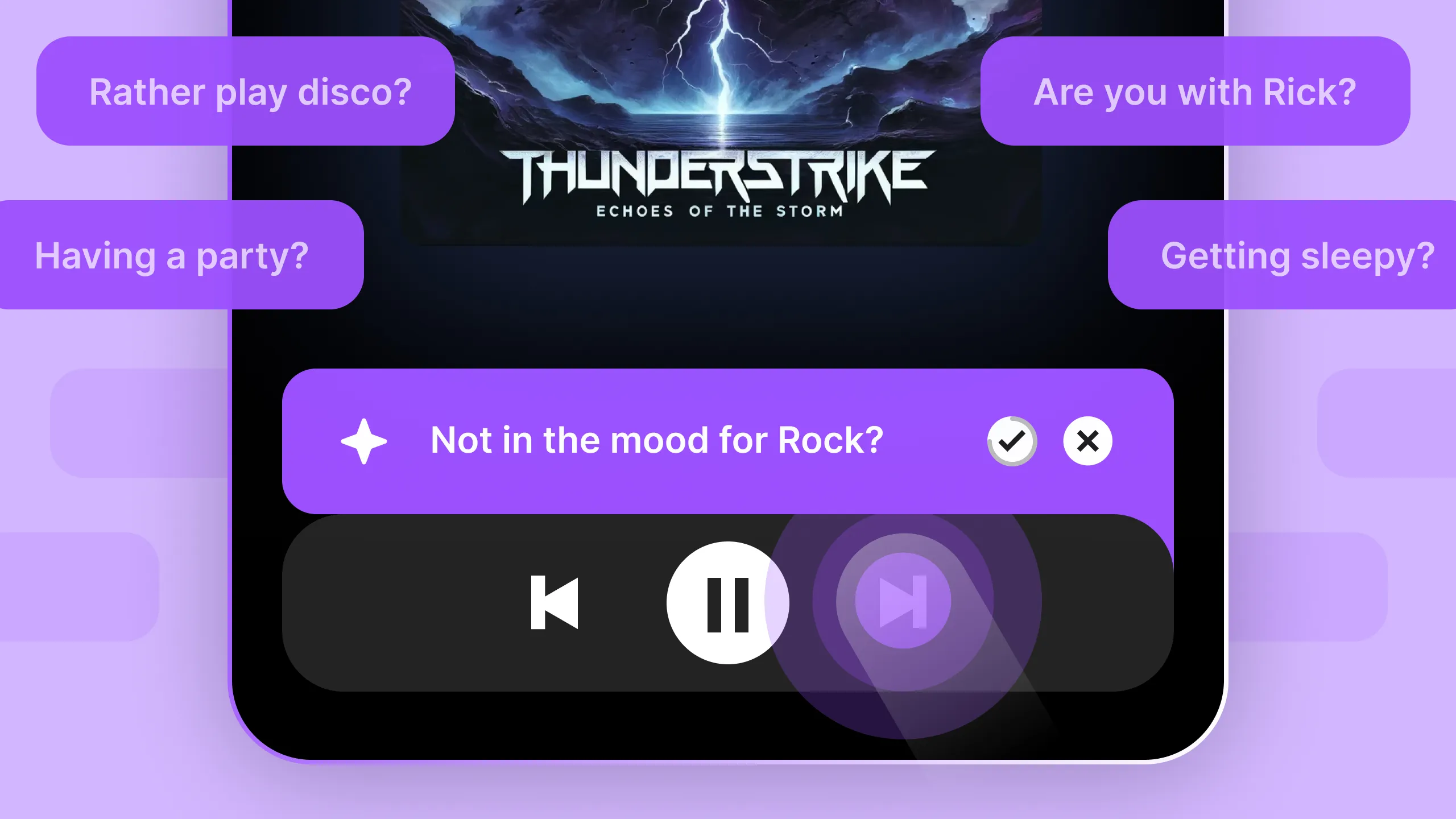

When an implicit action adds an observation to the context and the system makes a prediction from it, users should be able to evaluate and dismiss that prediction easily.

When an observation is added to the context of the AI system or a conclusion is reached, I want to evaluate and dismiss it easily, so I can ensure the information is accurate and relevant to my needs.

- Transparency in Knowledge Gathering: Make it easy for users to understand the factors that influence and shape the knowledge being gathered. When new information is added to the context, clearly communicate this to the user.

- Control over Assumptions: Provide a simple way for users to dismiss or challenge assumptions to ensure the accuracy and reliability of the knowledge base.

More of the Witlist

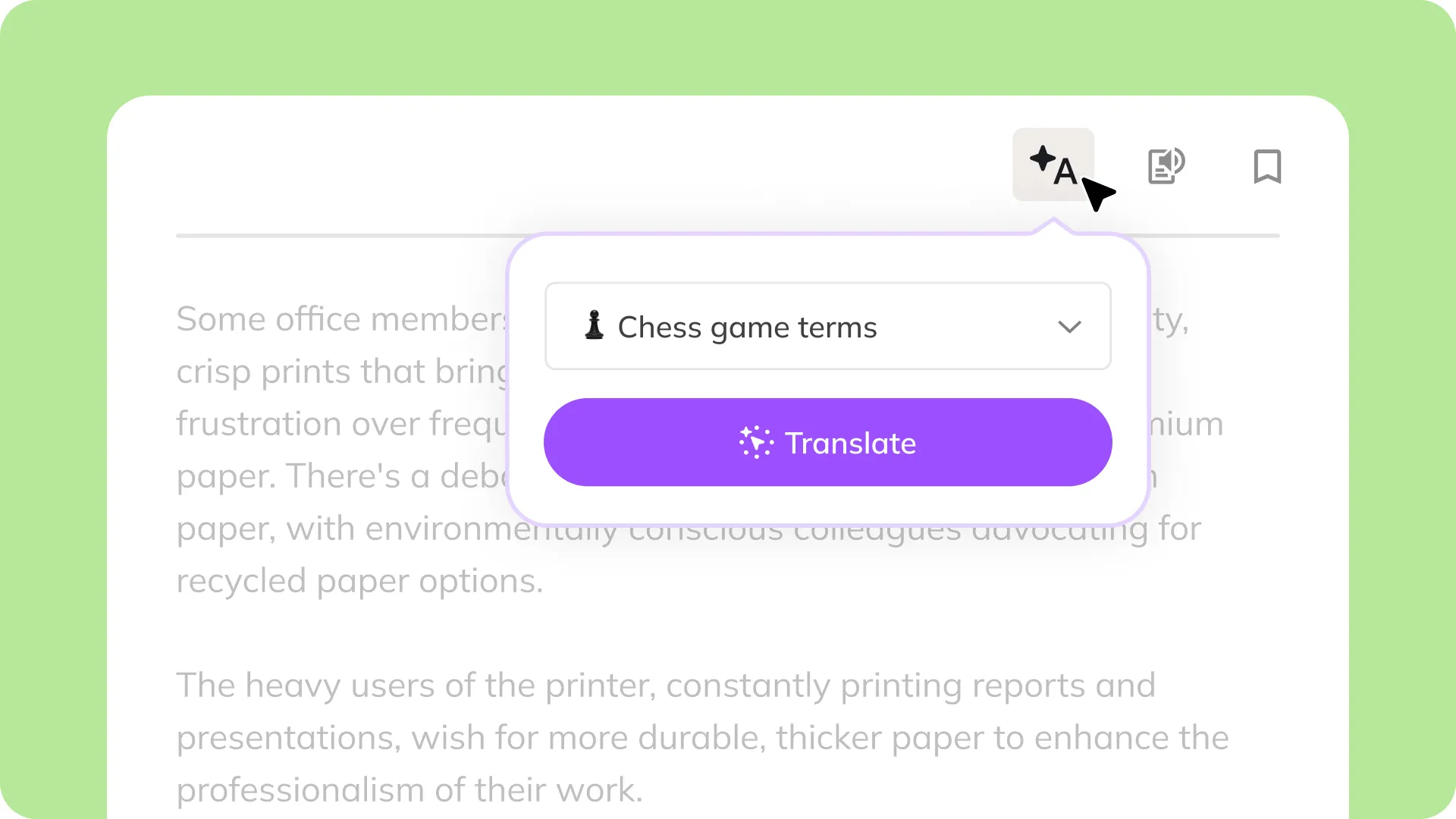

Provide relatable and engaging translations for people with varying levels of expertise, experience and ways of thinking.

Guiding users to understand what makes a good prompt helps them learn how to craft prompts that produce better outputs.

An intelligent assistant that analyzes emails to identify questions and feedback requests, offers pre-generated response options, and expands the chosen options into complete, contextually appropriate replies.

Using the source input as ground truth helps users trust the system and makes it easy to interpret its process and identify what might have gone wrong.

Generative AI can provide custom types of input beyond just text, like generated UI elements, to enhance user interaction.

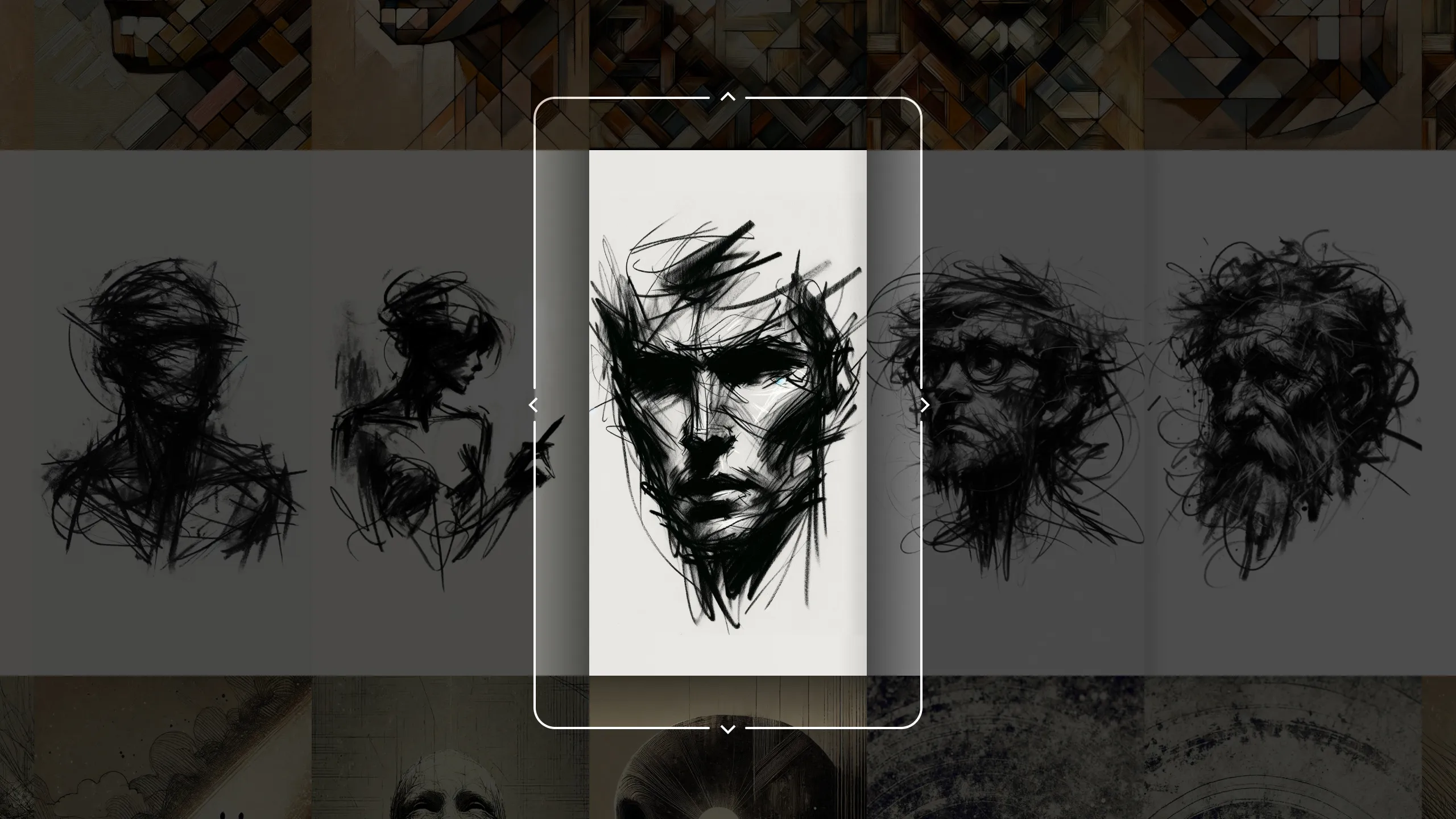

Ordering content along different interpretable dimensions, such as style or similarity, makes it navigable on x and y axes, which facilitates exploration and the discovery of relationships within the data.