Substantiated findings

LLMs are great at organizing narratives and findings. Seeing the sources that support each conclusion makes it easier to understand the analysis and where it comes from.

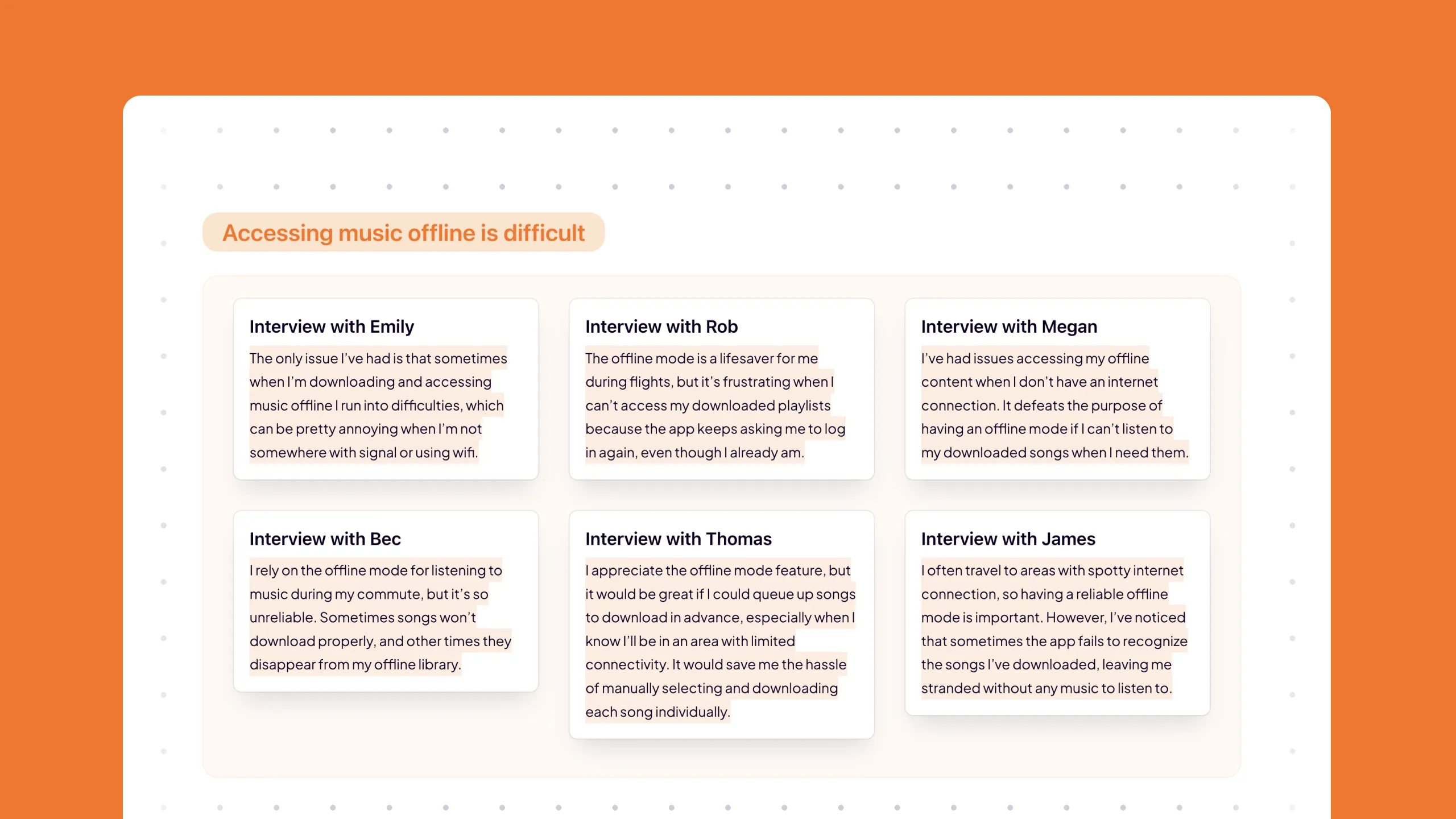

When reviewing findings, I want to see the supporting sources, so I can understand and trust the conclusions more easily, and see how ideas are substantiated.

- Comparing Similarity in Source Materials: Seeing similar pieces of information grouped together helps you compare how alike the source materials are.

- Building Trust through Access to Original Sources: Access to original sources builds trust in the findings, as users can review and understand the basis of the conclusions.
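One way to picture this pattern is as a data structure in which every finding carries its own supporting excerpts, so the interface can always show where a conclusion came from. The sketch below is a minimal illustration under assumptions: the names `Source`, `Finding`, and `render_finding` are hypothetical and not part of any particular product or library, and the example data is made up.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Source:
    # Hypothetical fields: where the excerpt comes from and what it says.
    title: str
    excerpt: str


@dataclass
class Finding:
    # A conclusion produced by the model, plus the sources that back it up.
    summary: str
    sources: List[Source] = field(default_factory=list)


def render_finding(finding: Finding) -> str:
    """Return the finding followed by a numbered list of its supporting excerpts."""
    lines = [finding.summary]
    for number, source in enumerate(finding.sources, start=1):
        lines.append(f'  [{number}] {source.title}: "{source.excerpt}"')
    if not finding.sources:
        # Make unsupported findings visible instead of hiding the gap.
        lines.append("  (no supporting sources)")
    return "\n".join(lines)


if __name__ == "__main__":
    # Made-up example data, for illustration only.
    example = Finding(
        summary="Participants found setup too slow.",
        sources=[
            Source("Interview A", "It took me most of an hour to get started."),
            Source("Interview B", "The first-time setup felt long."),
        ],
    )
    print(render_finding(example))
```

Listing the excerpts side by side under a single finding also supports the comparison use case: similar passages from different sources appear together, so a reader can judge how alike they are.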

More of the Witlist

Generative AI can provide custom types of input beyond just text, like generated UI elements, to enhance user interaction.

Proactive agents can autonomously initiate conversations and actions based on previous interactions and context, providing timely and relevant assistance.

Referencing nested data from your database in the form of tags can simplify the creation of elaborate prompt formulas.

Generating multiple outputs and iteratively using selected ones as new inputs helps people uncover ideas and solutions, even without clear direction.

AI collaboration agents can act as writing partners that assist people by enhancing their content through transparent, easily understandable suggestions, while respecting the original input.

Presenting multiple outputs helps users explore and identify their preferences and provides valuable insights into their choices, even enabling user feedback for model improvement.